This blog presents a new toolkit to assist in Dimensioning of MySQL Cluster.

The toolkit consists of two parts:

- ndb_record_size

- a spreadsheet to assist in calculating the amount of storage needed etc.

ndb_record_size is a program that calculates the amount of storage (IndexMemory, DataMemory, Disk space (for disk data table)) that is needed for a particular table in a database. The program takes into account the storage overhead needed when calculating the record size.

There are two versions of the spreadsheet, one for MySQL Cluster 6.2, and one for MySQL Cluster 6.3. The main difference is that the spreadsheet for MySQL Cluster 6.3 takes into account compression of LCP and Backups if you chose to enable that.

The spreadsheet uses the information given by ndb_record_size and you can determine how many data nodes you need, the amount of IndexMemory/DataMemory that is needed etc.

Please note that these tools are not Supported by MySQL Support. However, I have used them with a number of customers where they have proven very successful.

Compiling ndb_record_size

The ndb_record_size program needs to be compiled (let me know if problems exists on Supported platforms other than Linux) before we can use it:

- tar xvfz ndb_record_size.tgz

- cd ndb_record_size

- (edit the Makefile to change "INSTALL_DIR". If you are using the Configuration Tool, and are using the default INSTALL path there, you don't have to do anything)

- make

To run it:

> export LD_LIBRARY_PATH=/usr/local/mysql/mysql/lib/mysql:$LD_LIBRARY_PATH

> ./ndb_record_size

This will print out some help information how the program should be executed:ndb_record_size <connect> <database> <table>

- <connect> - the host where the management server is running

- <database> - name of the database where the <table> is stored

- <table> - the name of the table to dimension

Before we can run the program to dimension the tables, we need to have the tables created in storage engine=ndb.

Migrating existing MyISAM/Innodb database

If you have an already existing database in MySQL (e.g in MYISAM, Innodb) that you would like to migrate to MySQL Cluster you can do:

>mysqldump --no-data > databasedump.sql change the engine=MyISAM or engine=INNODB to engine=ndb, e.g by:

> cat databasedump.sql | sed -e 's/MyISAM/NDB/' > databasedump-ndb.sql> use the mysql client and do "source databasedump-ndb.sq"

Best practice using ndb_record_size is to provision the database with reference data.

The reason for this is to be able to calculate storage correctly needed by VAR* attributes.

If you have no reference data, then ndb_record_size will default to maximum length of the VAR* attribute.

E.g, if you have a column "email varchar(255)" then the size of the record will be calculated with email having a column length of 256B. However, in real-life an email address is e.g mostly often not that long, but the average could be actually be e.g only 64B.

If you populate using reference data, then ndb_record_size will calculate the size of the record with the average size for each varchar/varbinary attribute over max 1000 records.

If you have 5 records for reference data, it will average over those 5 records. If one record exists, it will take that.

Using reference data you will get more exact information on how much storage you will need!

CREATE TABLE `t1` (

`a` int(11) NOT NULL,

`b` bigint(20) DEFAULT NULL,

`c` char(21) DEFAULT NULL,

`d` varchar(22) DEFAULT NULL,

`e` bit(1) DEFAULT NULL,

PRIMARY KEY (`a`),

KEY `b` (`b`)

) ENGINE=ndbcluster DEFAULT CHARSET=latin1

and a few variants of that one. I have not inserted any reference data (bad practice).I then run the ndb_record_size program in this table.

./ndb_record_size localhost test t1

Calculating storage cost per record for table t1:

--- WARNING! No reference data found for VAR*. Defaulting to max size..23 Bytes

Consider loading database with average data for exact measurement

--- Ordered Index found named (PRIMARY). Additional cost per record is = 16 bytes of DataMemory.

--- Ordered Index found named (b). Additional cost per record is = 16 bytes of DataMemory.

Record size (incl OH):

#Rows found=0 records (--A required)

#OrderedIndexes=2

#UniqueHashIndexes=0

#blob/text=0

DataMemory=137 bytes

IndexMemory=20 bytes

Diskspace=0 bytes

Appending the following to test.csv

test,t1,0,2,0,0,20,137,0

As you can see the ndb_record_size program recommends me to populate with average data/reference data in order to calculate a good size of the VAR* attribute. It also prints out how many ordered indexes, and unique indexes you have on this table.Finally, ndb_record_size appends the storage required to a csv file called

I run the program a couple of more times to size all the four tables I have created in the test database:

./ndb_record_size localhost test t2

./ndb_record_size localhost test t3

./ndb_record_size localhost test t4I then look at the output of the test.csv file:

>cat test.csv

test,t1,0,2,0,0,20,137,0

test,t2,0,2,1,0,40,140,0

test,t3,0,0,0,0,20,179,0

test,t4,0,1,0,0,20,196,0

Using the dimensioning spreadsheet

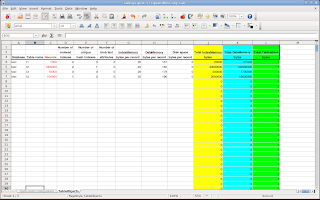

The information in the test.csv file is pasted/imported into the sizing spreadsheet.

As you see in the picture below, I have also added how many records I will have in each table.

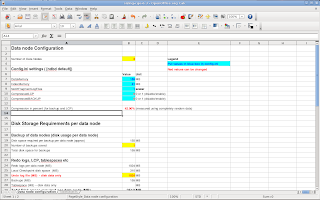

When you have added how many records you will have in each table then you can navigate to the tab "Data node configuration". What that tab looks like is shown below:

As you can see there are two fields, DataMemory and IndexMemory.

These fields (and NoOfFragmentLogFiles etc) are automatically derived from the data provided in the tab "TableObjects".

The values in "red" can be altered. Values in "blue" boxes should be transferred to the [ndbd default] section of the config.ini.

E.g, you can now change the number of data nodes (they must be even 2,4,6...) and the storage needed for each data node is then updated in the spreadsheet.

Thus, you can see how many data nodes you need to handle a particular database size.

When you have determined how many data nodes you need to have by checking that the "Total RAM per data node" and the "Total Disk space per data node" you can transfer these values into your config.ini file and restart the cluster.

If you have generated a configuration using the Configuration Tool then you can do like this:

1. cd mysqlcluster-XY

2. edit config/config.ini

3. update the config.ini and save and exit

4. If you have changed "NoOFragmentLogFiles" you must run (in order to recreate the redo log on disk with the new size)

4.1. sh rolling-restart-initial.sh

Or if you haven't:

4.2. sh rolling-restart.sh

The above operation is online, meaning you can have traffic while performing this step.

If you have changed the number of Data Nodes then it is not possible to update the configuration online (yet). You must generate a new configuration, e.g by using the Configuration Tool, with the correct number of data nodes! Don't forget to stop the old cluster before installing the new cluster!

6 comments:

Using 5.1.23-cluster 6.2

Under ubuntu x64

g++ -c -Wall -fno-rtti -fno-exceptions -march=x86-64 -I/usr/local/mysql//include/mysql -I/usr/local/mysql//include/mysql/storage/ndb/ ndb_record_size.cpp -I/usr/local/mysql//include/mysql/storage/ndb/ndbapi ndb_record_size.cpp

g++ -Wall -march=x86-64 -L/usr/local/mysql//lib/mysql ndb_record_size.o -lndbclient -o ndb_record_size

/usr/local/mysql//lib/mysql/libndbclient.so: undefined reference to `my_strdup'

/usr/local/mysql//lib/mysql/libndbclient.so: undefined reference to `my_sleep'

/usr/local/mysql//lib/mysql/libndbclient.so: undefined reference to `my_charset_bin'

/usr/local/mysql//lib/mysql/libndbclient.so: undefined reference to `my_malloc'

/usr/local/mysql//lib/mysql/libndbclient.so: undefined reference to `my_end'

/usr/local/mysql//lib/mysql/libndbclient.so: undefined reference to `my_gethostbyname_r'

/usr/local/mysql//lib/mysql/libndbclient.so: undefined reference to `get_charset'

/usr/local/mysql//lib/mysql/libndbclient.so: undefined reference to `base64_needed_decoded_length'

/usr/local/mysql//lib/mysql/libndbclient.so: undefined reference to `my_snprintf'

/usr/local/mysql//lib/mysql/libndbclient.so: undefined reference to `base64_decode'

/usr/local/mysql//lib/mysql/libndbclient.so: undefined reference to `decimal_bin_size'

/usr/local/mysql//lib/mysql/libndbclient.so: undefined reference to `my_thread_init'

/usr/local/mysql//lib/mysql/libndbclient.so: undefined reference to `my_fstat'

/usr/local/mysql//lib/mysql/libndbclient.so: undefined reference to `my_init'

/usr/local/mysql//lib/mysql/libndbclient.so: undefined reference to `strnmov'

/usr/local/mysql//lib/mysql/libndbclient.so: undefined reference to `my_thread_end'

/usr/local/mysql//lib/mysql/libndbclient.so: undefined reference to `my_stat'

/usr/local/mysql//lib/mysql/libndbclient.so: undefined reference to `my_no_flags_free'

...fixing it.

Kitai

ested with 5.1.22 and crashes too.

So, i added this to g++ line in Makefile

-lmysqlclient -lmysqld

and now works.

Thanks a lot for the utility. You just cannot imagine how useful this will be until Mysql Dev people implements autoincrement tablespaces ;-)

Kitai

Hi,

thanks for you comments.

I have updated the Makefile.

In MySQL Cluster 6.3 a problem with linking has been fixed so it is not necessarily to link with anything else than libndbclient (b/c it contains all relevant symbols). However this is not the case with MySQL Cluster 6.2.

I agree with you that autoextending table spaces would make a lot of difference. But you can always add more data files to a table space, and this can be done online!

BR

Johan

The file is no longer there. :(

The requested URL /downloads/dim/ndb_record_size.tgz was not found on this server.

There is a new version of the dimtoolkit called sizer. Please check the link at the top of the page or go here

Good luck,

johan

Post a Comment