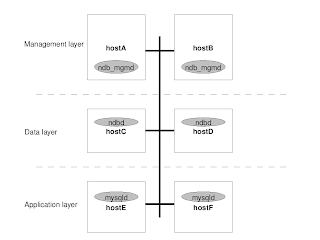

In

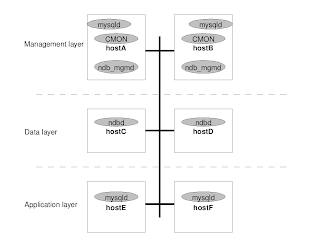

part 1 we got the cluster monitoring up and running. Indeed a good step forward into taming Cluster. This time we are going to add process management to our solution - if a node fails (ndbd, ndb_mgmd, mysqld etc) fails, then it should be automatically restarted.

To do this we need:

- init.d scripts for each process, so there is a way to start and stop the processes.

- process management software (I have chosen monit for this).

The init.d scripts presented below are templates and you may have to change things such as paths.

monit itself does not need an init.d script, as we will install monit in inittab so that the OS restarts it if it dies (I live in a Linux world, so if you use Solaris or something else this may not apply in that environment).

init.d scripts - walkthrough

We need init.d scripts for the following processes:

- ndb_mgmd

In this script you should change:

host=<hostname where the ndb_mgmd resides>

configini=<path to config.ini>

bindir=<path to ndb_mgmd executable>

If you have two ndb_mgmd, you need to copy this file to the two computers where you have the management server and change the hostname to match each computer's hostname.
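For orientation, a script of this kind boils down to a few variables plus a start/stop dispatcher. The following is a minimal sketch and not the actual template: the paths are placeholders, the names `run` and `mgmd_dispatch` are made up for illustration, stopping via `killall` is an assumption, and `host` is left out because the text above does not say how the template uses it. With `DRY_RUN=1` it only prints what it would run.

```shell
#!/bin/sh
# Sketch of an ndb_mgmd init.d script. Paths are placeholders, not the
# values from the real template.
configini=/etc/mysql-cluster/config.ini   # path to config.ini (hypothetical)
bindir=/usr/local/mysql/bin               # path to ndb_mgmd (hypothetical)
DRY_RUN=1                                 # set to 0 to really run the commands

# Run a command, or just print it when DRY_RUN=1.
run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "would run: $*"
    else
        "$@"
    fi
}

# Dispatch on the usual init.d verbs.
mgmd_dispatch() {
    case "$1" in
        start)   run "$bindir/ndb_mgmd" "--config-file=$configini" ;;
        stop)    run killall ndb_mgmd ;;   # assumed: stop by signalling the process
        restart) mgmd_dispatch stop && mgmd_dispatch start ;;
        *)       echo "Usage: ndb_mgmd {start|stop|restart}" >&2
                 return 2 ;;
    esac
}
```

A real script would end with `mgmd_dispatch "$1"` so that `/etc/init.d/ndb_mgmd start` and `/etc/init.d/ndb_mgmd stop` work as expected.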

- ndbd

If you run >1 ndbd on each computer use the ndbdmulti script.

In this script you should change:

host=<hostname where the ndbd runs>

connectstring=<hostname_of_ndb_mgmd_1;hostname_of_ndb_mgmd_2>

bindir=<path to ndbd executable>

##remove --nostart if you are not using cmon!!

option=<--nostart>

##if you use ndbdmulti you need to set the ndbd node id here (must correspond to Id in the [ndbd] section of your config.ini).

nodeid=<nodeid of data node on host X as you have set in [ndbd] in config.ini>

If you use ndbdmulti, you need one ndbdmulti script for each data node on a host, because each ndbdmulti script must have its own unique nodeid set. Thus you have e.g. /etc/init.d/ndbdmulti_1 and /etc/init.d/ndbdmulti_2.
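To make the role of these variables concrete, here is a minimal sketch of how they might be combined into the ndbd command line. It is an illustration under assumptions, not the template itself: the hostnames, path and node id are placeholders and `ndbd_command` is a made-up helper name. The options `--ndb-connectstring`, `--ndb-nodeid` and `--nostart` are regular ndbd options.

```shell
#!/bin/sh
# Sketch: assemble the ndbd command line from the script variables.
connectstring="mgmhost1;mgmhost2"   # placeholder hostnames; quoted because of the ';'
bindir=/usr/local/mysql/bin         # placeholder path to the ndbd executable
option=--nostart                    # set to "" if you are not using cmon
nodeid=3                            # only for ndbdmulti; set to "" otherwise

# Print the command line that would start this data node.
ndbd_command() {
    cmd="$bindir/ndbd --ndb-connectstring=\"$connectstring\""
    if [ -n "$nodeid" ]; then
        cmd="$cmd --ndb-nodeid=$nodeid"
    fi
    if [ -n "$option" ]; then
        cmd="$cmd $option"
    fi
    echo "$cmd"
}

ndbd_command
```

Note the quotes around the connectstring: an unquoted `;` would be treated by the shell as a command separator.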

- mysqld

In this script you may want to change the following things to reflect your configuration.

basedir=<e.g /usr/local/mysql/>

mycnf=<path to my.cnf>

bindir=<e.g /usr/local/mysql/bin/>

- cmon

In the init.d script for cmon you can change the following things.

It is recommended, however, to have a mysql server and a management server on the same host/computer as where cmon is running.

bindir=<by default cmon is installed in /usr/local/bin>

## the following libdir covers the most common places for the mysql libraries, otherwise you must change so that you have libndbclient on the libdir path.

libdir=/usr/local/mysql//mysql/lib/mysql:/usr/local/mysql//mysql/lib/:/usr/local/mysql/lib/:/usr/local/mysql/lib/mysql

OPTIONS - there are a bunch of cmon options you might have to change as well. By default cmon will try to connect to a mysql server on localhost, port=3306, socket=/tmp/mysql.sock, user=root, no password, and to a management server (ndb_mgmd) on localhost.
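Before starting cmon it can be worth checking that libndbclient really is somewhere on the libdir path. The sketch below is one way to do that. It assumes the library file name starts with `libndbclient.so`, and `find_ndbclient` is a made-up helper name, not part of cmon.

```shell
#!/bin/sh
# Sketch: print the first directory on a colon-separated path that
# contains a libndbclient shared library; return 1 if none does.
find_ndbclient() {
    old_ifs=$IFS
    IFS=:
    found=""
    for dir in $1; do
        for lib in "$dir"/libndbclient.so*; do
            # An unmatched glob stays literal, so test that the file exists.
            if [ -e "$lib" ] && [ -z "$found" ]; then
                found=$dir
            fi
        done
    done
    IFS=$old_ifs
    if [ -n "$found" ]; then
        echo "$found"
    else
        return 1
    fi
}
```

For example, `find_ndbclient "$libdir" || echo "libndbclient not found on libdir" >&2` prints a warning when none of the listed directories contains the library.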

install init.d scripts

You should copy the scripts to /etc/init.d/ on the relevant computers and try them before moving on!

Once all scripts are tested and ok, you need to add the scripts to the runlevels so that they are started automatically when the computers reboot.

# on the relevant host(s):

sudo cp cmon /etc/init.d/

# on the relevant host(s):

sudo cp ndb_mgmd /etc/init.d/

# on the relevant host(s):

sudo cp ndbd /etc/init.d/

# on the relevant host(s):

sudo cp mysqld /etc/init.d/

On Redhat (and I presume Fedora, OpenSuse, Centos etc):

# on the relevant host(s):

sudo chkconfig --add cmon

# on the relevant host(s):

sudo chkconfig --add ndb_mgmd

etc..

On Ubuntu/Debian and relatives:

# on the relevant host(s):

sudo update-rc.d cmon start 99 2 3 4 5 . stop 20 0 1 6 .

# on the relevant host(s):

sudo update-rc.d ndb_mgmd start 80 2 3 4 5 . stop 20 0 1 6 .

# on the relevant host(s):

sudo update-rc.d ndbd start 80 2 3 4 5 . stop 20 0 1 6 .

etc..

Reboot a computer and make sure the processes start up ok.

If you are going to use cmon, then cmon will control the start of the data nodes. If the data nodes are started with --nostart, they will only connect to the management server and then wait for cmon to start them.

monit - build

The first thing we need to do is to build monit on every computer:

- download monit

- unpack it:

tar xvfz monit-4.10.1.tar.gz

- compile:

cd monit-4.10.1

./configure

make

sudo make install

I think you have to do this on every computer (I have not tried to copy the binary from one computer to every other computer).

Now that monit is installed on every computer we want to monitor, it is time to define what monit should monitor. Basically monit checks a pid file. If the process is alive, great; otherwise monit will restart it. There are many more options and I really suggest that you read the monit documentation!
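The pid-file check described above can be sketched in a few lines of shell. This only illustrates the idea and is not how monit is implemented; `pid_alive` and `check_and_restart` are made-up names.

```shell
#!/bin/sh
# Sketch of a pid-file liveness check, similar in spirit to what monit does.

# Succeed if the pid stored in the pid file belongs to a live process.
pid_alive() {
    pidfile=$1
    [ -r "$pidfile" ] || return 1     # no pid file: treat as not running
    pid=$(cat "$pidfile")
    case "$pid" in
        ''|*[!0-9]*) return 1 ;;      # empty or non-numeric content
    esac
    # Signal 0 sends nothing; it only tests whether we could signal the
    # process (so it also fails if we lack permission to signal it).
    kill -0 "$pid" 2>/dev/null
}

# $1 = pid file, remaining arguments = command used to restart the process
check_and_restart() {
    pidfile=$1
    shift
    if pid_alive "$pidfile"; then
        echo "running"
    else
        echo "not running, restarting"
        "$@"
    fi
}
```

A call could look like `check_and_restart /data1/mysqlcluster/ndb_3.pid /etc/init.d/ndbd start`. monit does this for you on every poll cycle, with logging and many more options on top.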

monit - scripts

monit uses a configuration file called monitrc. This file defines what should be monitored. Below are some examples that I am using:

- monitrc for ndb_mgmd, mysqld, and cmon

You should copy this script to /etc/monitrc:

sudo cp monitrc_ndb_mgmd /etc/monitrc

and change the permission:

sudo chmod 700 /etc/monitrc

Then you should edit monitrc and change where the pid files are (it is likely you have different data directories than me):

check process ndb_mgmd with pidfile /data1/mysqlcluster/ndb_1.pid

etc..

- monitrc for ndbd

If you have >1 ndbd on a machine, use monitrc for multiple ndbd on same host

You should copy this script to /etc/monitrc:

sudo cp monitrc_ndbd /etc/monitrc

and change the permission:

sudo chmod 700 /etc/monitrc

Then you should edit monitrc and change where the pid files are (it is likely you have different data directories than me):

check process ndbd with pidfile /data1/mysqlcluster/ndb_3.pid

etc..

- monitrc for mysqld

You should copy this script to /etc/monitrc:

sudo cp monitrc_mysqld /etc/monitrc

and change the permission:

sudo chmod 700 /etc/monitrc

Then you should edit monitrc and change where the pid files are (it is likely you have different data directories and hostnames than me):

check process mysqld with pidfile /data1/mysqlcluster/ps-ndb01.pid

etc..
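Since the monitrc entries all follow the same pattern, they can also be generated. The sketch below prints a "check process" stanza for one service. The start and stop lines assume the init.d scripts accept `start` and `stop` arguments, and `monit_stanza` is a made-up helper name; the real monitrc files may contain more settings than this.

```shell
#!/bin/sh
# Sketch: print a monit "check process" stanza for one service.
# $1 = service name, $2 = pid file, $3 = init.d script
monit_stanza() {
    cat <<EOF
check process $1 with pidfile $2
    start program = "$3 start"
    stop program = "$3 stop"
EOF
}

monit_stanza ndbd /data1/mysqlcluster/ndb_3.pid /etc/init.d/ndbd
```

After editing /etc/monitrc, `monit -t -c /etc/monitrc` runs a syntax check on the file without starting anything.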

monit - add to inittab

The final thing is to add monit to inittab so that if monit dies, then the OS will restart it!

On every computer you have to do:

echo '#Run monit in standard run-levels' >> /etc/inittab

echo 'mo:2345:respawn:/usr/local/bin/monit -Ic /etc/monitrc' >> /etc/inittab

monit will log to /var/log/messages, so tail it to check that all is ok:

tail -100f /var/log/messages

If not then you have to change something :)
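The echo commands above append unconditionally, so running them twice leaves duplicate entries in inittab. A minimal sketch of a guarded version follows. `add_monit_to_inittab` is a made-up name, and the check simply looks for an existing entry with the id `mo`.

```shell
#!/bin/sh
# Sketch: append the monit respawn entry only if it is not already there.
# $1 = inittab file (defaults to /etc/inittab, which requires root).
add_monit_to_inittab() {
    inittab=${1:-/etc/inittab}
    entry='mo:2345:respawn:/usr/local/bin/monit -Ic /etc/monitrc'
    if grep -q '^mo:' "$inittab" 2>/dev/null; then
        echo "entry with id 'mo' already present in $inittab"
        return 0
    fi
    {
        echo '#Run monit in standard run-levels'
        echo "$entry"
    } >> "$inittab"
    echo "added monit entry to $inittab"
}
```

On SysV init systems, `telinit q` asks init to re-read /etc/inittab, so the new entry takes effect without a reboot.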

If it seems ok, then kill a process that is managed:

killall -9 ndbd

and monit will automatically restart it:

Sep 30 13:15:44 ps-ndb05 monit[4771]: 'ndbd_3' process is not running

Sep 30 13:15:44 ps-ndb05 monit[4771]: 'ndbd_3' trying to restart

Sep 30 13:15:44 ps-ndb05 monit[4771]: 'ndbd_3' start: /etc/init.d/ndbd_3

Sep 30 13:15:44 ps-ndb05 monit[4771]: 'ndbd_7' process is not running

Sep 30 13:15:44 ps-ndb05 monit[4771]: 'ndbd_7' trying to restart

Sep 30 13:15:44 ps-ndb05 monit[4771]: 'ndbd_7' start: /etc/init.d/ndbd_7

Sep 30 13:15:49 ps-ndb05 monit[4771]: 'ndbd_3' process is running with pid 5680

Sep 30 13:15:49 ps-ndb05 monit[4771]: 'ndbd_7' process is running with pid 5682

So that should be it. If you have done the things correctly you should now have a MySQL Cluster complete with monitoring and process management. An easy way to accomplish this is to use the configuration tool, which generates the various scripts (init.d, build scripts etc)!

Please let me know if you find better ways or know about better ways to do this!